Scaling WooCommerce talk at WooCommerce London on 23rd May 2016

Let me start by expressing my shock:

- Slideshare is owned by LinkedIn

- Slideshare has no idea what to do with keynote files

Hence this blog post. You can grab my slides by clicking this very link (it will open a dropbox link in this window, but will not begin downloading automatically).

Now the narration to go along with it.

The event and

Lately we’ve been dealing with a number of support tickets where the main concerns were that above a certain size the site slows down, and it’s the fault of $plugin. Usually ours are blamed, or maybe because we see those tickets. The meetup is also focused on Scaling with Edmund Turbing speaking about WP Engine and their setup and some of their customers’ stories.

Inception

You have a business idea, and you want to take it to market fast. You have an eCommerce idea in mind, and because WordPress and WooCommerce are both free and fairly easy to set up, you decide to go with it. Initially you don’t think you’re going to amass huge traffic, so you decide to take out a $5 a month hosting plan, which I chose to illustrate with a Raspberry Pi Zero.

Your first customers come in, and the Zero serves them happily. Then more come, then even more. The Zero is struggling. And then more people come, and then the Zero just gives up. It can’t handle that.

What happened?

The Pi Zero was overwhelmed. It didn’t have the muscle to handle to workload. There are two ways to remedy that: either lower the workload, or gain muscle.

Imagine Walmart (or Tesco). Your aim there is to move as many customers through the checkout positions as possible in a given timeframe, because serving 1000 customers is more $$$ than serving only 100.

In order to do that, you need a high throughput. Throughput is the function of two things in that instance:

- number of lanes open

- speed with which those lanes handle individual customers

Of course the store can also have so many people inside at any given time. The bigger the store, the more people. The more people you have in store, the harder it is for folk to move around there, and everything kind of slows down.

To maximise your throughput here, you can do the following:

- open more lanes

- train your checkout staff to work more efficiently / faster

- have equipment that lends itself to high speed scanning / payment taking

- have less people in your store at any given time

Of course people not IN the store can still browse your store by the magic that are catalogs

To turn these into server speak:

- bigger / more CPU - faster work at checkout

- more memory - more lanes open (also a function of CPU too), plus bigger store to have more folk in store at once

- caching - have your people take home a catalogue, and browse that instead of your site

Scaling segue

When we talk about scaling resources, there are two directions you can go: up and out.

Scaling up: replace your Pi Zero with something more beefy: a Pi 3, an hp server in a rack somewhere.

Scaling out: add more of the same servers: have 15 hp servers in the rack

Will my site be faster if I throw more hardware at it?

That is the ultimate question, and the answer is: maybe. If the software is suboptimal, or not set out to do things at scale (I’ll get to this in a sec), then no, throwing more hardware at the problem won’t help you.

A common misconception about WordPress and WooCommerce is that they both scale out of the box. They don’t, you need to sacrifice time, money, and developers on making that happen; there’s no way around it.

Please don’t sacrifice developers at altars though. They don’t tend to like you very much after that.

Charts!

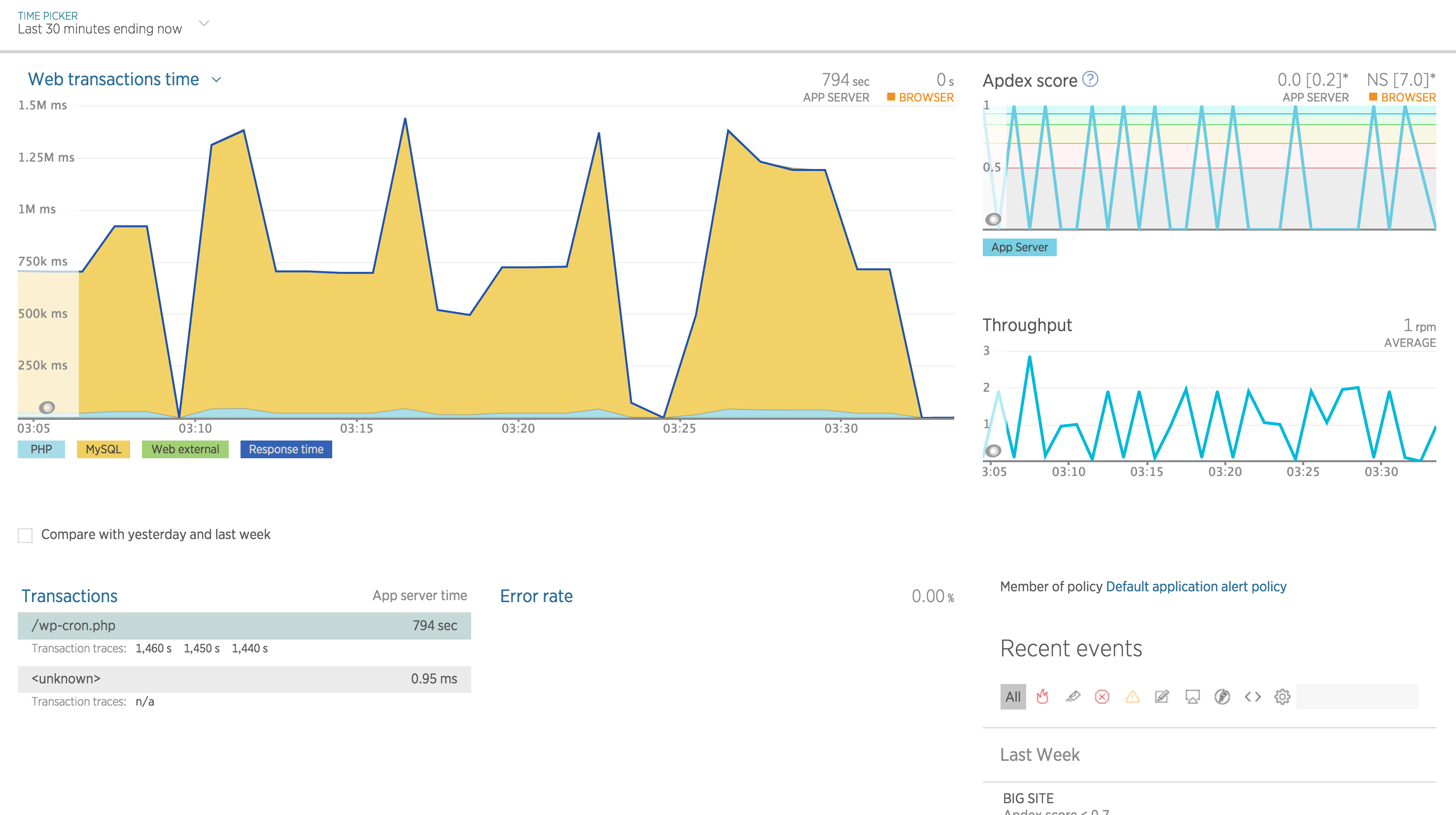

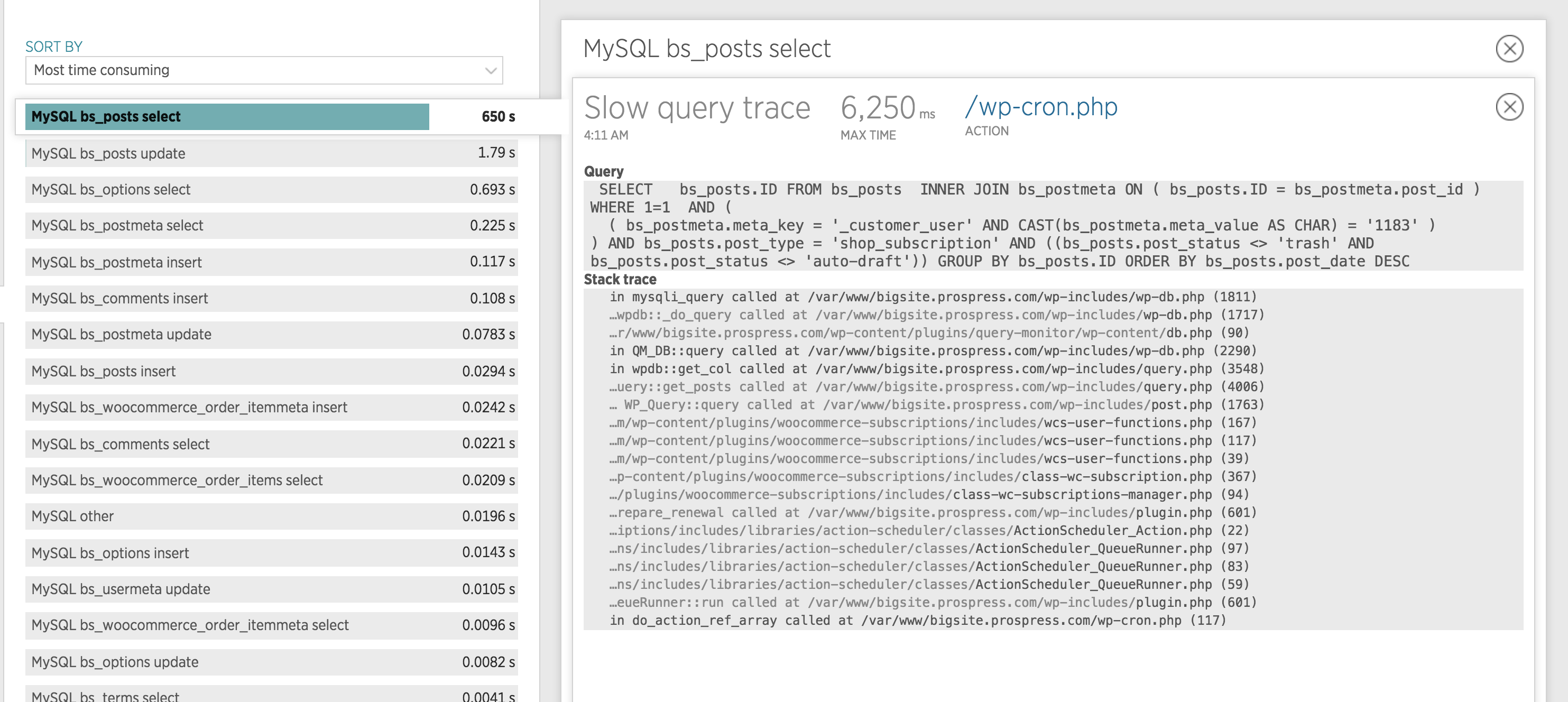

Let’s consider this wee image from New Relic taken of our own testing server where we have 100,000 subscriptions renewing (trying to) each week:

Here’s what’s wrong with the site according to data there:

- the big yellow blob means that the server is spending disproportionately more time dealing with MySQL than literally everything else

- the Appdex score (top right) frequently reaches 0. When it reaches 0, it means site’s users are frustrated. Appdex = number of satisfied users / total number of requests. "What portion of requests get filled within some time limit?" If zero, none of them. When Appdex is zero, the site’s bricked. See https://docs.newrelic.com/docs/apm/new-relic-apm/apdex/apdex-measuring-user-satisfaction.

- throughput is measly low (below Appdex score)

- time it takes for

wp-cronis near 800 seconds, or a bit more than 13 minutes

Throwing more hardware at THAT one won’t help much. Plus we’ve been running that on a 32 GB memory with 12 cores, and it was still struggling because MySQL. We scaled it back to 8 GB memory and 4 cores without impacting performance.

This is where the term engineering comes in, and that’s why you pay your developers: to solve these issues.

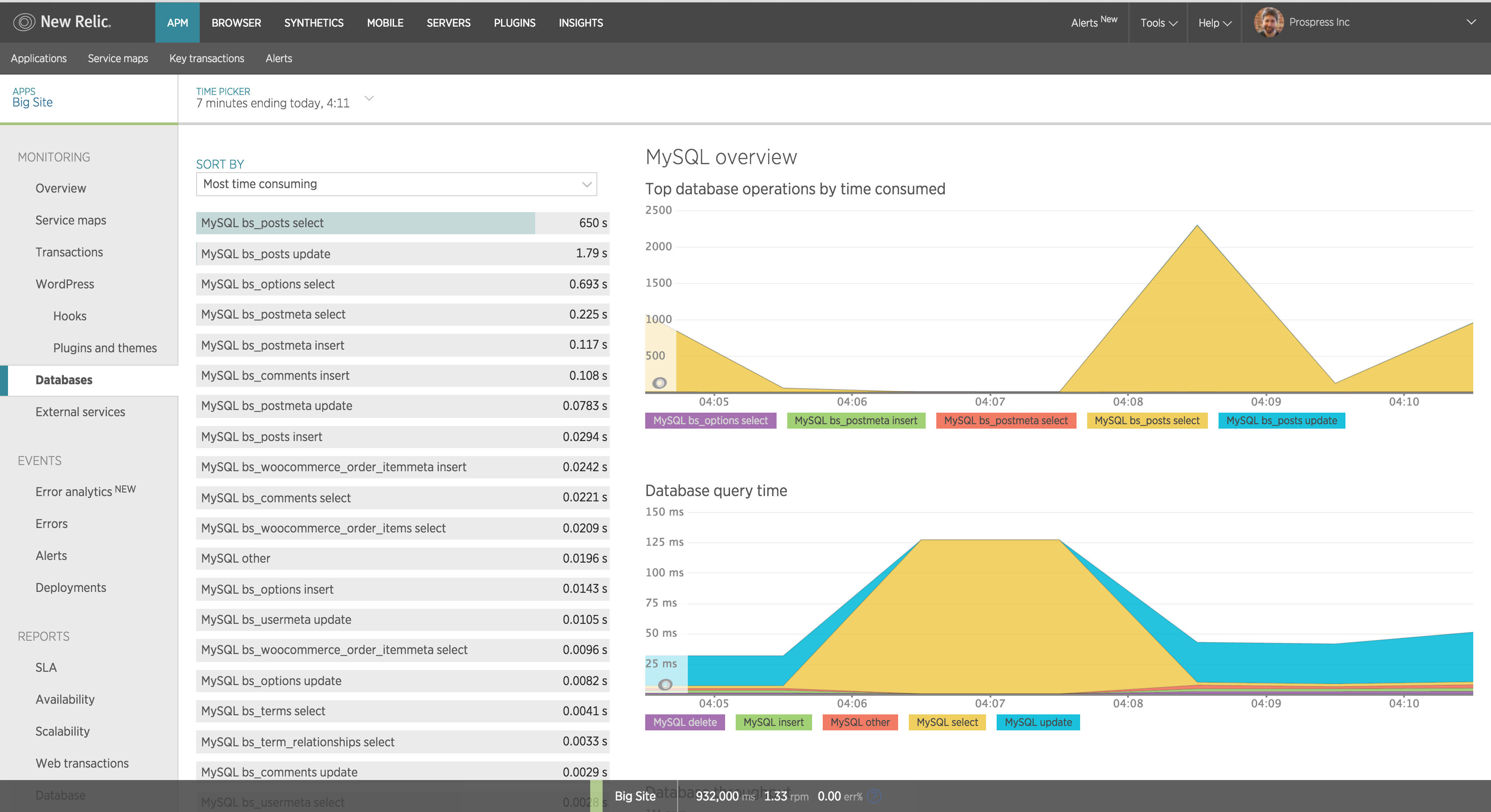

The biggest bottleneck is the MySQL. What we need to find out:

- what is causing that bottleneck?

- can we do anything about it?

Next slide:

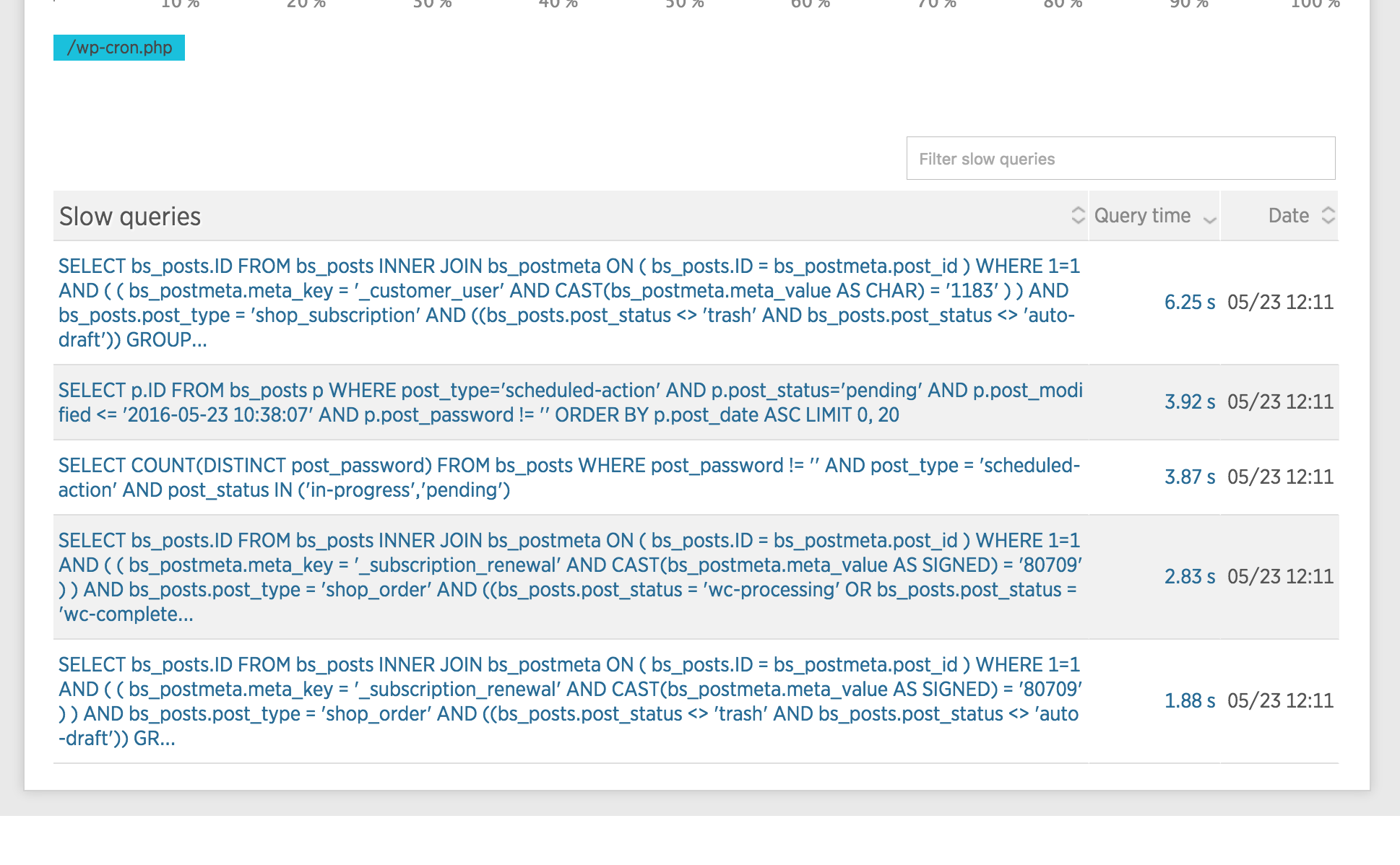

Filtering for time and clicking into the database operations yields the above chart. It’s a SELECT on the posts table. Clicking on that:

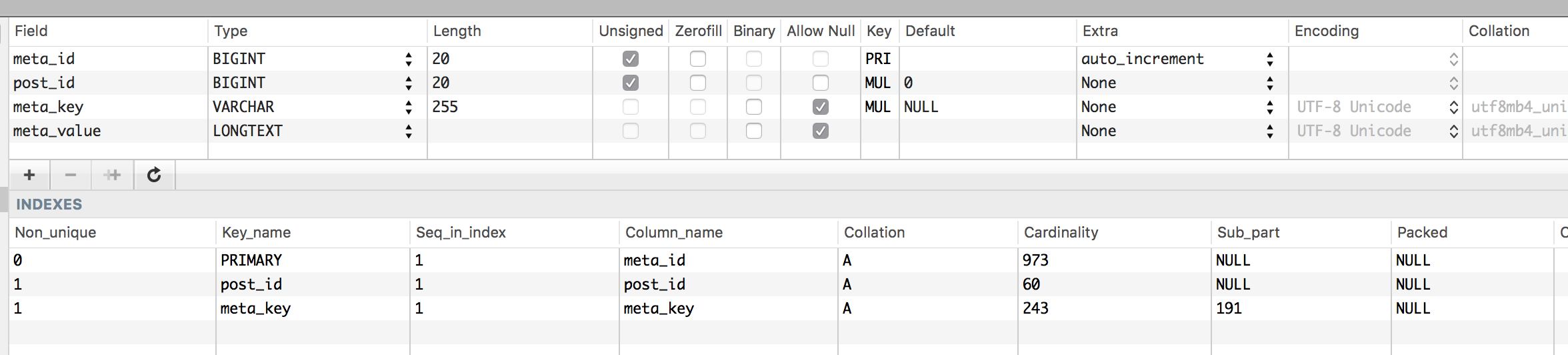

Note that the query time is only 6.25 seconds, but because it runs 100 times, that adds up to the 650 seconds seen above. Drilling into it some more (clicking on the query itself):

It’s a fairly large postmeta table, and it needs to search by meta_value, which is not indexed, and meta_key is only partially indexed:

Indices!

Why are they important?

This is your library:

Your task is to find all the books by Isaac Asimov. The books are in no particular order. Without indexing, this is what you’d do:

check book 1: Is it by Isaac Asimov? No...

check book 2: is it by IA? No...

check book 3: is it by IA? No...

... one eternity later ...

check book 88321: is it by IA? YES! Let’s grab it, and...

check book 88322: is it by IA? No...

This is your indexing cabinet:

Finding all the books in this case:

walk up to the cabinet

find the drawer for Isaac Asimov

pull out cards with the numbers on them

walk up to the books and get them

You’re done a LOT faster. Sadly searching by meta_value is like the first method, so the bigger your postmeta table gets, the longer it takes, which is like the opposite of scaling.

Can your developer find solutions to these? Can they work around some of these limitations?

Things you can do

Create indexed helper tables to help data retrieval

Subscriptions is using a temporary table to query by last payment date. ~2 hour query => 900ms. GHOST_URL/2016/02/22/how-i-improved-a-query-for-our-plugin-by-several-orders-of-magnitude/

Patrick Garman of ColourPop has an order => customer_id helper table that sped things up considerably.

Subscriptions 2.1 is adding 2 more tables for dates and related orders. 40 seconds for 1 query on a big site vs 26 seconds to create ALL new tables (with indices), and about 3ms to query for the same date thing.

Easy Digital Downloads is moving there: https://pippinsplugins.com/resolving-poor-data-schema-designs/.

So is WooCommerce: https://github.com/woothemes/woocommerce/issues/10071.

Disable / dequeue the ajax cart fragment.

Live cart means live data. Live data means no cache. No cache means unhappy servers. Rip.

Eliminate unnecessary code execution

When creating a subscription through the REST API, the site was calling a hook 4 times (to add customer data to the API response). 3 times it was unnecessary to do so, and it took a long time. The API call was 67 seconds on my local computer using customer’s database. Got it down to 17 sec.

(still a lot, but that’s where the order => customer_id table comes in)

Make sure the site runs without errors / notices

Okay, this is going to be super controversial, but hear me out.

Make sure the site runs without errors / notices

- Turn on debugging and debug logging.

- Let the site function for a day / week.

- Look at the debug.log file.

- If it’s not empty, solve the issues that produce the entries first!

- Entries will likely cause bugs.

If the site you’ve built has a non-empty debug.log, fix it. Talk to the plugin developers. Reach into the code, fix it for them, it‘s all GPL anyways. And they’ll love you for it. Or not, but then don’t use their plugin.

But no one is paying me to fix someone else’s code!

No, but you’re paid (hopefully) to make sure your clients’ sites are top notch. But seriously, send the devs an email / pull request / open an issue.

When to upgrade hosting?

Cost-benefit analysis. If you’re on a “cheap” host (less than $50 a month, shared hosting), then first step is to move to a low tier VPS.

If you struggle on a low tier VPS, move to a mid-tier VPS, talk to your host about providing analytics, and work with a developer to dissect the data and fix things.

If you’ve grown that out, move to a high-tier VPS and hire another developer as well. At that point you should have the income to support those :).

BUT WAIT THERE’S MORE

Seriously, sign up for New Relic, and start using that if you have a moderately traffic heavy site. It is just so awesome. Here, have a link (non-affiliate. I don’t think they have affiliate things): https://newrelic.com/signup

終わり

owari – "the end"

As usual, any questions, hit me up on the tweeting contraption.